Agentic AI can be described as any goal-oriented autonomous system that instructs AI models to gather the information and produce the outputs required to make decisions, take actions, adapt and learn. In basic terms, instead of a human user prompting a system, agentic AI uses AI to prompt itself.

Systems that use agentic AI will often still require some level of human supervision. However, the level needed may decrease over time as confidence in the system's performance grows.

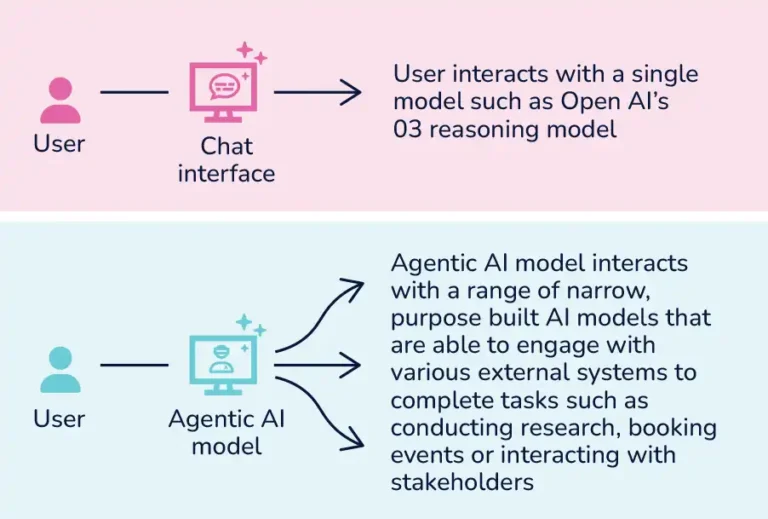

Chat interface vs. Agentic AI

Agentic AI reduces the skills needed to use AI effectively. This should drive adoption across industries, especially among highly skilled professionals who manage complex workflows, such as lawyers.

When generative AI-powered chatbots like ChatGPT were first released, many assumed that lawyers and other professionals would need to learn prompt engineering to use AI. However, if agentic AI develops as expected, much of this work will be handled by technical teams who build prompt engineering into AI systems, reducing the value of end users developing these skills themselves. What is currently a human decision about when and how to prompt AI will instead be made by an AI model, which will decide how to instruct an ensemble of further models to achieve the desired result.

Because automating processes with agentic AI will be much easier than doing so through a tool like ChatGPT, systems that use it should accelerate AI adoption in organizations, especially in legal teams with complex, dynamic processes.


It is already possible to automate simple processes made up of a predetermined sequence of tasks by using fixed logic to chain together responses from different AI models and pass information between them. This approach breaks down, however, when complex reasoning is needed to determine the next best step.

Many in-house legal processes are highly complex. They often require lawyers to analyze new information as it arrives, weigh a range of competing factors and seek out further information from disparate sources.

Current technology helps lawyers with some of the straightforward "doing" part of their work, such as drafting documents, summarizing contracts, reviewing documents for relevance or privilege, or even writing emails. Agentic AI has the potential to manage the process itself and decide how to complete a series of tasks to reach a goal. This could be far more efficient, especially for processes that call for a blend of decisions to be made, content to be generated and actions to be taken.

In-house legal teams are tasked with reducing risk but are often not consulted until after the fact, when it is too late. Agentic AI gives in-house legal teams an opportunity to let stakeholders across the business, in sales, marketing or product, consult legal far more often and get early, instant advice on matters.

In-house legal teams have traditionally received much of their work through intake channels such as email, instant messages or conversations. Streamlining intake through an AI-powered portal or channel will let them use AI to source the most relevant information and draw on it to advise on simple queries. This will free legal to focus on the highest-value matters while retaining visibility over everything else.

Agentic AI is well suited to this type of workflow. Using an agentic model to coordinate intake could allow the legal team to improve accuracy and maintain control by, for example:

• Setting guardrails that require the AI to proactively get approval before responding to certain matters; or

• Using a number of narrower, purpose-built AI models, each with access to specific predefined datasets, for tasks such as drafting emails, reviewing NDAs or approving marketing material.
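To make the two controls above more concrete, here is a minimal sketch of a coordinator that routes intake requests to narrower specialist handlers and holds certain matter types for lawyer approval. Everything in it is hypothetical: the `Request`, `Coordinator`, `SPECIALISTS` and `REQUIRES_APPROVAL` names are illustrative, the handlers return placeholder text rather than calling real models, and a simple dictionary lookup stands in for the routing decision that an agentic model would make in practice.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Request:
    requester: str    # business team raising the query, e.g. "sales"
    matter_type: str  # e.g. "email", "nda", "marketing"
    text: str


# Hypothetical narrower "specialist" handlers. In a real system each would call
# a purpose-built model with access only to its own predefined dataset.
def draft_email(req: Request) -> str:
    return f"Draft email reply for {req.requester}"


def review_nda(req: Request) -> str:
    return f"NDA review notes for {req.requester}"


def review_marketing(req: Request) -> str:
    return f"Marketing copy assessment for {req.requester}"


SPECIALISTS: dict[str, Callable[[Request], str]] = {
    "email": draft_email,
    "nda": review_nda,
    "marketing": review_marketing,
}

# Guardrail: matter types the legal team has decided always need sign-off.
REQUIRES_APPROVAL = {"marketing"}


@dataclass
class Coordinator:
    # Drafts waiting for a lawyer to approve them before they are released.
    approval_queue: list[tuple[Request, str]] = field(default_factory=list)

    def handle(self, req: Request) -> str:
        specialist = SPECIALISTS.get(req.matter_type)
        if specialist is None:
            # Unknown matter types are escalated rather than guessed at.
            return "escalated to legal team"
        draft = specialist(req)
        if req.matter_type in REQUIRES_APPROVAL:
            # Hold the draft instead of responding directly.
            self.approval_queue.append((req, draft))
            return "pending lawyer approval"
        return draft


coordinator = Coordinator()
print(coordinator.handle(Request("sales", "nda", "Can we sign this NDA?")))
print(coordinator.handle(Request("marketing", "marketing", "Is this claim OK?")))
```

The design keeps the guardrail outside the specialists: each narrower handler only produces a draft, and the coordinator alone decides whether that draft goes straight back to the requester, waits in the approval queue, or is escalated.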

AI is already used extensively in due diligence to review bulk sets of contracts, extract and analyze key clauses and produce reports. However, many other components of due diligence require lawyer input, such as coordinating document collection, interpreting the data, collaborating across teams to understand the impact of potential risks or red flags, producing project updates and making decisions.

Today, the friction of working through a chat interface would likely deter a lawyer from using AI to generate project updates, send reminder emails to stakeholders or analyze extracted contract data. A system that used agentic AI to complete these tasks automatically would remove many of those barriers, improve the quality of the output and potentially streamline the entire process.

Traditional machine learning and generative AI already power contract review solutions, but agentic AI could add significant value by automating the entire process. Today's solutions are largely built to help with one specific task: reviewing a third-party contract against the in-house legal team's agreed playbook more efficiently. Yet much of the time spent on contract review goes into the wraparound tasks, such as giving the internal owner a timeline for delivering the work, saving document versions into a CLM (contract lifecycle management) system, consulting subject matter experts across the business on different issues, compiling emails to the counterparty outlining the key issues, providing regular updates and reaching consensus on the acceptable level of risk given the nature and size of the opportunity.

A system could use agentic AI to call narrower AI models automatically: drafting an email to the counterparty and an update to stakeholders based on the redlines the lawyer produces, estimating the timeline for signing the contract based on how far apart the parties are on key issues, and scheduling time with key stakeholders in the infosec or procurement teams to discuss any issues identified in the contract review.


There is growing demand for technical professionals to work in legal, and agentic AI will accelerate it. Shifting responsibility for completing tasks based on AI outputs away from the end user, so that the user instead approves decisions made by a model that completes tasks autonomously, will remove some of the existing barriers to AI adoption and make AI much easier for lawyers to use. It will also create far more work for those building the systems.

Creating any AI system requires large volumes of relevant, high-quality, clean data. In legal, this data is often difficult to source because decisions made by lawyers weigh many factors, depend on extraneous information that an AI model may not have access to, and are not always made consistently across the team.

Take, for example, a legal team that wants to build an agentic AI model to orchestrate decisions on whether marketing copy is legally compliant. The data required to make that decision would include many previous examples of marketing copy, along with whether each was approved and the reasons why.

It could also include the relevant contracts for any customers referenced in the copy, and the email chain or transcript in which they provided the quoted content. The model may also need access to notes and conversations from the product team and the results of any testing carried out to verify the claims in the material. All of this data would be incredibly difficult to compile and make available to an agentic AI model.

Prompting AI models to generate accurate results is a skill that takes time and persistence to develop, so building agentic AI models that can themselves accurately prompt other AI models will require teams with a rare combination of technical and legal expertise. Individuals with complementary skill sets, such as data science, process automation or computer science, who can use technology to build systems that perform legal tasks will be in high demand.

Agentic AI is another step toward in-house legal teams transforming from busy, reactive advisors who spend most of their time responding to issues into strategic, proactive enablers. In that role, their primary job is to set up frameworks and policies that help the wider business operate in a way that accounts for legal risk and reduces it where sensible.

For lawyers, the initial focus should be on building the skills that will help them work with technologists to turn their deep legal expertise into scalable, reusable legal products. Lifting lawyers out of the day-to-day grind of doing the work will free up time for legal teams to focus on proactively anticipating and mitigating legal risks to the organization.

For the wider organization, shifting the bulk of day-to-day legal requests to technology will give teams faster, more consistent legal advice and will likely accelerate the pace of business, especially for low-risk deals.

As lawyers, it's crucial that we begin engaging with and learning about agentic AI so that we can understand both its potential and its limitations.